LLM Debugging

How to use neural activation patterns to root cause errors LLM makes

tldr; In this quite technical article, we explore how to manually debug an LLM-type neural network by inspecting neuron activations.

Introduction

In this episode, we address the challenge of transforming natural language into structured text in Json format. For example, if a user issues the command ‘call Mark’, the AI should convert this utternance into a predefined structured format:

{

“command”: “make_phonecall”,

“object”: “Mark”

}This process constitutes a core component of numerous AI applications, such as user assistants, tool utilization, robot control, online service interactions, and AI agents. This class of tasks, which we will refer to here as the axiomisation of semantic domains, is currently recognized as one of the persistent challenges AI face. As widely acknowledged, existing LLM models like ChatGPT are fundamentally capable of performing this task, though not flawlessly; in a certain percentage of such tasks, the AI commits errors. This is presumably one of the limiting factors in the mass adoption of AI, explaining why automation is transforming thousands, rather than millions, of jobs.

Nevertheless, available methods do have practical applications. Frequently, however, we would prefer to deploy this kind of functionality to small local models operating on laptops, smartphones, or possibly embedded controllers. Experiments have demonstrated that quantization and model refinement rapidly degrade the axiomisation capability of such models. Rectified models, despite being computationally efficient, exhibit errors from which their larger counterparts are free.

Experiment

Now, we will work on the Bielik-1.5B LLM model. It consumes 3GB or ram memory, so it can run easily on average laptop and maybe high-end phones. This is Polish-language LLM model and at this time we will work with Polish, but don’t worry I’ll give translations where needed, and content will be rather rudimentary anyway. We will expect model to transform natural speech statement in Polish to be converted to json structure as above, according to the supplied prompt as follows:

Given the sentence generate json file with following fields: 1. field named command that contains one of values: make_phonecall , send_sms , find_movie , other; 2. field named object that is target of command. Sentence to analyse: call Mark. Generate json only, without any additional descriptions.This very prompt translated to Polish will look as follows, and that is what we feed into Bielik model:

Z podanego zdania wygeneruj json z polami: 1. command ktore zawiera jedna z komend: make_phonecall , send_sms , find_movie , other; 2. pole object do ktorego komenda sie odnosi. Zdanie do analizy: zadzwon do Marka. wygeneruj tylko json bez opisow.As a result of this prompt we get this:

{

“command”: “make_phonecall”,

“object”: “Mark”

}

{

“command”: “send_sms”,

“object”: “customer_account”

}

{

“command”: “find_movie”,

“object”: “movie_theater”

}

{This approach works reasonably well for larger versions of this model, such as Bielik-11B, but the 1.5B model makes unexpected mistakes. As we can see, the model continues to generate further copies of the expected structure with nonsensical data.

In other cases, the action object is, for unexplained reasons, translated into English, for example lets change person name Mark to institutional name like Human Resource Department:

zadzwoń do działu kadr (call to human resources department)so full prompt look as follows:

Z podanego zdania wygeneruj json z polami: 1. command ktore zawiera jedna z komend: make_phonecall , send_sms , find_movie , other; 2. pole object do ktorego komenda sie odnosi. Zdanie do analizy: zadzwoń do działu kadr. wygeneruj tylko json bez opisow.Now LLM will produce output:

{

“command”: “make_phonecall”,

“object”: “directory_of_human_resources”

}

{

“command”: “send_sms”,

“object”: “customer_account”

}

{

“command”: “find_movie”,

“object”: “movie_theater”

}

{And besides generating unwanted records, the object value is translated to English because of unclear reason. We can say that in this case AI hallucinated that we requested to additionally translate object name. That’s not what we wanted!

Methods of the day do not allow for much deeper analysis in this case. We can attempt to guess an improved prompt, but this could be difficult. Fine-tuning is another option, albeit complicated and risky, as fine-tuning can inadvertently cause regression in other areas. We could as well experiment with some form of guardrails, employ dedicated MCP protocols, try few-shot learning - but this all is shooting blind. Crucially, such techniques do not provide insight into the nature of the problem.

An alternative, which I will present here, is to leverage the PIONA framework to identify the root cause of the error. At this point you might want to get aware of how PIONA framework works as rest of article relays on it heavily. You might also like to see my rant about neural nets as vapor system.

Error No. 1 nonsense data generated

Let us first examine first error: LLM generates excessive records, conforming to json format but with nonsense data not following from request we gave:

{

“command”: “make_phonecall”,

“object”: “directory_of_human_resources”

}

{

“command”: “send_sms”,

“object”: “customer_account”

}

{

“command”: “find_movie”,

“object”: “movie_theater”

}

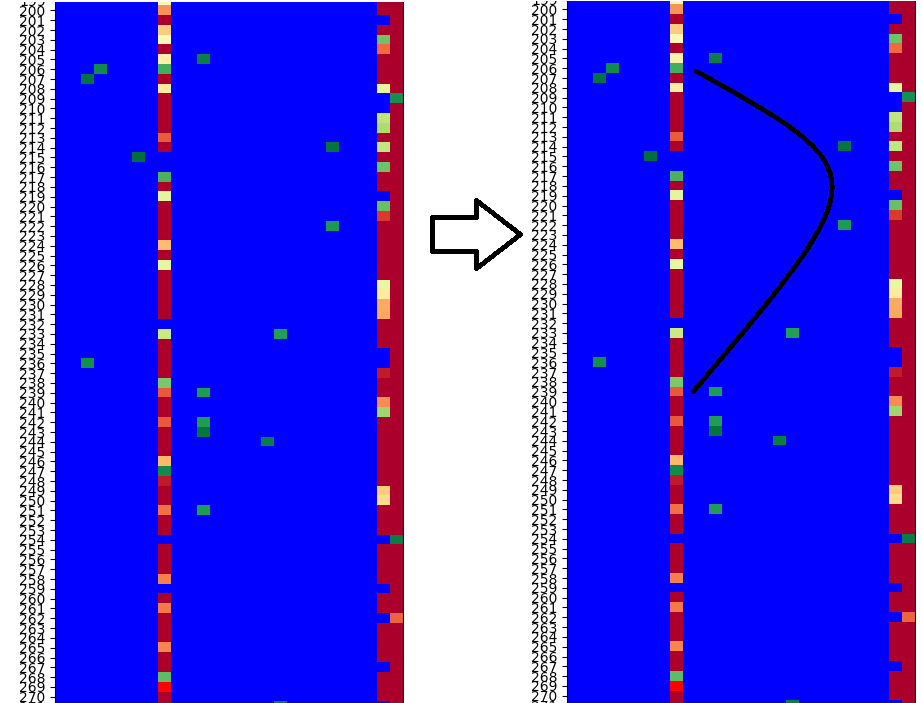

{We will look at neuron activations in last layer of LLM neural network, and process them as described in PIONA. This model has 12 attention heads; we compress the width of this layer by a factor of 60, i.e., 5*12. To make it readable we take excerpt for tokens from 200 to 270 - you can see full source materials at the end of article in appendix.

In this case, we are observing signals sorted by neuron 24. Activations for this segment look like that:

What is particularly intriguing is that, as is always the case in analysis via the PIONA framework, we observe signal arcs. In this case from token 205 to 239 which corresponds to this part of generated text:

..._human_resources”

}

{

“command”: “send_sms”,

“object”: “customer

...So this arc leads to tokens of the ‘object’ field in the second Json record. This signal arc begins on the token residing in object value of first generated record. Signal arc illustrates that the ‘object’ field in second record is being associated with a token outside the request prompt. This is clear evidence that something here is amiss, as we know the ‘object’ field should be populated from information in the prompt itself! Thus, when we observe such a situation in the activations, we can mark the entire resulting second record as faulty, or at least suspect. From the fact that second record draws information from first json record follows that second record is invalid, as for this prompt we know records should be based on information in prompt only.

Error No. 2 Unwanted translation

Lets look now at second problem we had, emitted response contains object value translated to English, even though it is crucial for us to have it in original language. We use the same prompt as before:

Z podanego zdania wygeneruj json z polami: 1. command ktore zawiera jedna z komend: make_phonecall , send_sms , find_movie , other; 2. pole object do ktorego komenda sie odnosi. Zdanie do analizy: zadzwoń do działu kadr. wygeneruj tylko json bez opisow.{

“command”: “make_phonecall”,

“object”: “directory_of_human_resources”

}

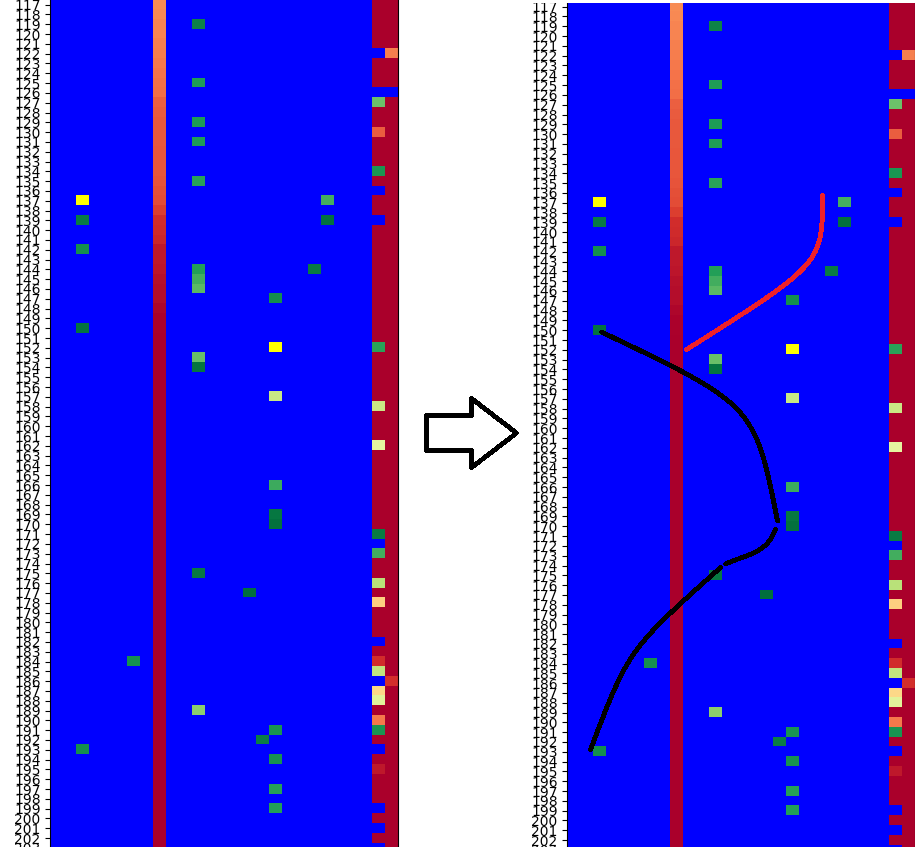

….Again we will look at neural activations, this time sorted with respect to values of neuron 9.

On left-hand side we see raw activations and on right-hand side my markings showing arc of interest

Now if we wanted to correlate this arcs with generated text it will be as follows

Z podanego zdania wygeneruj json z polami: 1. command ktore zawiera jedna z komend: make_phonecall , send_sms , find_movie , other; 2. pole object do ktorego komenda sie odnosi. Zdanie do analizy: zadzwoń do działu kadr. wygeneruj tylko json bez opisow.

{

“command”: “make_phonecall”,

“object”: “directory_of_human_resources”

}Italics part of text corresponds to first semi-arc marked with red color on graph. Bold part of text corresponds to second arc marked with black color on graph.

Here, we observe how the signal flows from the command specification in prompt to the object field generated in response. We see that the issue lies in the fact that the commands are specified in English. For reasons known only to itself, the model establishes a signal connection between the last element of the ‘command’ field and the ‘object’ field. While the exact reason for this connection remains unclear, it evidently plays a role. Now, let us replace the name of the last command in the prompt (’other’) with equivalent word in Polish (’inne’):

Z podanego zdania wygeneruj json z polami: 1. command ktore zawiera jedna z komend: make_phonecall , send_sms , find_movie , inne; 2. pole object do ktorego komenda sie odnosi. Zdanie do analizy: zadzwoń do działu kadr. wygeneruj tylko json bez opisow.And response is:

{

“command”: “make_phonecall”,

“object”: “dział kadr”

}Voilà – error fixed!

This way we prove that in this case network attaches act of generating tokens for object field to command specification in the prompt. Why is that is beyond scope of this article, but the side effect is that the language of generated output is ‘borrowed’ from this piece of specification. As we specify commands for output as English words, object content will be also translated to English even we never asked for that. Even almost all prompt is in Polish, this network decides to pick English language based on ‘other’ word in command specs. This kind of surprising ‘fallacies’ are identifiable with PIONA framework. Apparently, observed ‘arcs’ of signals carry information about token at arc start and this information is being used at arc end with sometimes undesired outcomes as in this example.

Of course, now we would need to post-process the output, check if model choose command ‘inne’, and in this case convert it back to its English equivalent ‘other’ — however, this is a trivial task.

We see how some issues can be swiftly patched without resorting to arduous and unpredictable fine-tuning.

Summary & conclusion

In this piece we see practical application for PIONA framework allowing to rootcause precise source for some bugs in LLM nets. Especially during development this ability to quickly identify and patch network malfunctions is particularly attractive. As in this form it is debugging tool requiring human interaction it musn’t be end of the story. Going further, if this methods can be automated we can imagine how neural networks will gain another advantage being capable to detect deep causal relations between parts of text as they generate. Even further we can think about gaining better understanding of how concepts relate to each other and what forces shape this relations inside LLMs. In effect this approaches could swiftly improve networks reliability, controllability as well as security.

Appendix

In pdf below source materials for this article are provided in raw unedited form.

Couldn't agree more. The analysis of axiomisation as a limiting factor for AI adoption really resonated. I wonder if the degradation we see in smaller models is primarily an issue of reduced parameter space or if it points to more fundamental archetectural deficiencies in handling structured output?